Accessing the Software Heritage Archive via the InterPlanetary FileSystem

Building a bridge to disseminate the Software Heritage archive over IPFS.

Software Heritage is a non-profit initiative with a mission to preserve open source software code. It houses the largest public archive of software source code in the world. IPFS, developed by Protocol Labs, provides a decentralized, peer-to-peer protocol designed to replace centralized internet infrastructure.

These two ecosystems are a natural match for a variety of reasons. They share the goal of preserving access to cultural commons, one by archiving software repositories and the other by distributing them. Behind the scenes, they also share the technical bases of content addressing and Merkle DAGs. Connecting them together is like connecting a warehouse (SWH) and a railroad (IPFS).

Last year, the NLnet Foundation accepted a joint proposal from Software Heritage and us for a grant to build a bridge between the centralized Software Heritage (SWH) archive and the distributed IPFS network. That grant was previously covered on the Software Heritage blog. Today, we present the result of our work.

The bridge we built in partnership enables IPFS users to access, retrieve, and redistribute SWH archival resources, while leveraging the P2P network to ease the burden of distribution for SWH.

Concept

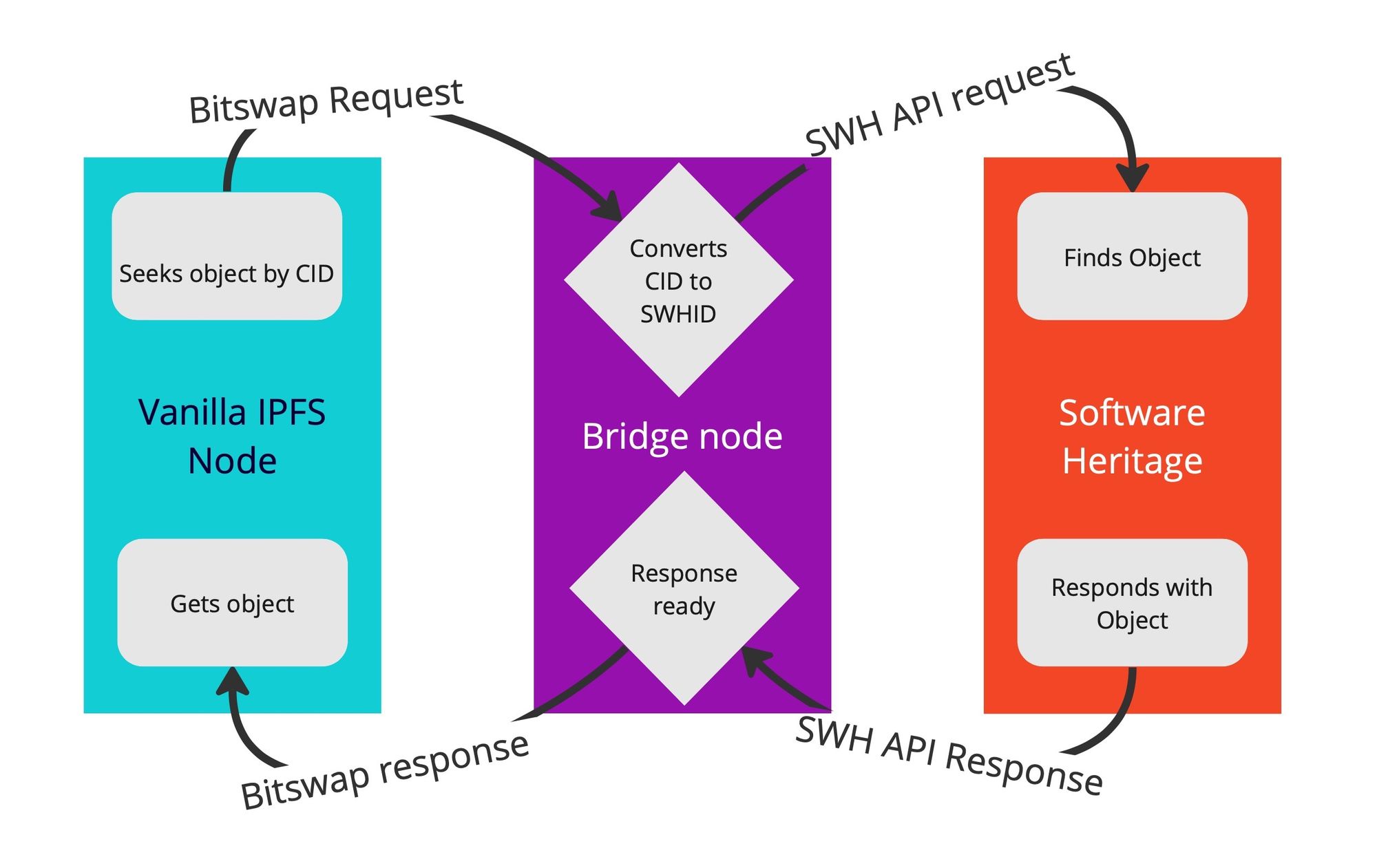

In a request-response flow between IPFS and SWH, the bridge node plugin we've created functions like middleware:

This simple workflow succeeds because both IPFS and SWH understand git hashing, all the way down. This crucial design decision by both parties transforms the process of bridging them from something quite difficult into something so natural the code practically writes itself.

Software Heritage stores every file indexed by its git hash. Directories are stored as git tree objects, so they in turn refer to their children by git hash. IPFS supports a variety of formats, and git is among them.
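To make "git hashing" concrete, here is a minimal Python sketch of how git derives the identifier of a file (a blob): a SHA-1 over a short header followed by the contents. The function name `git_blob_hash` is ours, chosen for illustration; it is not part of the bridge.

```python
import hashlib


def git_blob_hash(content: bytes) -> str:
    """Return the git object ID (hex SHA-1) for a file's contents."""
    # Git hashes a header ("blob", a space, the size in decimal, a NUL byte)
    # followed by the raw contents.
    header = b"blob " + str(len(content)).encode("ascii") + b"\0"
    return hashlib.sha1(header + content).hexdigest()


if __name__ == "__main__":
    # The empty file has a well-known git hash.
    print(git_blob_hash(b""))  # e69de29bb2d1d6434b8b29ae775ad8c2e48c5391
```

Because the identifier depends only on the bytes, any two systems that hash this way will agree on the name of a file without coordinating.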

The two content-addressed systems are similar enough that IPFS CIDs and SWHIDs can be converted between one another without seeing the actual content referenced by either ID. Not every IPFS CID can be an SWHID, but every SWHID can become an IPFS CID.
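As a rough illustration of that conversion, the Python sketch below maps a SWHID for a git-compatible object to the hex form of an IPFS CID and back. It assumes CIDv1 with the `git-raw` codec and a SHA-1 multihash, which is what the example CIDs later in this post look like. The function names are hypothetical, and the object type has to be supplied by the caller on the way back because a `git-raw` CID does not carry it; the bridge plugin itself may well handle this differently.

```python
import string

# Multibase "f" (lowercase hex), then CIDv1 (0x01), the git-raw codec (0x78),
# and a multihash header for SHA-1 (0x11) with a 20-byte digest (0x14).
GIT_RAW_SHA1_PREFIX = "f01781114"
GIT_OBJECT_TYPES = {"cnt", "dir", "rev", "rel"}


def swhid_to_cid(swhid: str) -> str:
    """Convert a core SWHID for a git-compatible object into a hex CID."""
    parts = swhid.split(":")
    if len(parts) != 4 or parts[0] != "swh" or parts[1] != "1":
        raise ValueError(f"not a version 1 core SWHID: {swhid!r}")
    object_type, hex_hash = parts[2], parts[3].lower()
    if object_type not in GIT_OBJECT_TYPES:
        raise ValueError(f"object type not handled in this sketch: {object_type!r}")
    if len(hex_hash) != 40 or any(c not in string.hexdigits for c in hex_hash):
        raise ValueError(f"expected a 40-digit hex SHA-1: {hex_hash!r}")
    return GIT_RAW_SHA1_PREFIX + hex_hash


def cid_to_swhid(cid: str, object_type: str) -> str:
    """Convert a hex git-raw CID back into a SWHID of the given type."""
    expected_length = len(GIT_RAW_SHA1_PREFIX) + 40
    if not cid.startswith(GIT_RAW_SHA1_PREFIX) or len(cid) != expected_length:
        raise ValueError(f"not a hex-encoded git-raw SHA-1 CID: {cid!r}")
    if object_type not in GIT_OBJECT_TYPES:
        raise ValueError(f"object type not handled in this sketch: {object_type!r}")
    return f"swh:1:{object_type}:{cid[len(GIT_RAW_SHA1_PREFIX):]}"


if __name__ == "__main__":
    print(swhid_to_cid("swh:1:cnt:94a9ed024d3859793618152ea559a168bbcbb5e2"))
```

Neither direction touches the referenced content: the hash is copied across unchanged and only the framing around it differs.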

Because all parties understand the same content addressing format, data integrity is easy. This simplifies communication and removes the need for fussy translation of identifiers. All data is tamper-proof throughout because content-addressed references cannot be fooled without triggering a hash mismatch.

How would this be used?

Just one IPFS node with our plugins installed is required to bridge IPFS and SWH. Other IPFS nodes requesting objects from the bridge node do not need our plugins or any other extra modifications.

Below is a walkthrough showing the bridge node and a single unmodified “client” node. The latter stands in for the rest of the IPFS network, which is able to connect to the bridge.

Alternative A: Using Software Heritage's temporary public bridge node

Software Heritage is temporarily deploying a public bridge node for testing purposes.

While this is up, instead of running a bridge oneself, one can just use this bridge node, making this easier to try out.

The node is at:

/dns4/ipfs.softwareheritage.org/tcp/4001/p2p/12D3KooWBThWcjxdCsXg2jRWoBnBkLyr8wghDArHotApjJZAyW3T

To connect to that public node, one would run:

ipfs swarm connect /dns4/ipfs.softwareheritage.org/tcp/4001/p2p/12D3KooWBThWcjxdCsXg2jRWoBnBkLyr8wghDArHotApjJZAyW3T

We are still including the full instructions for setting up the bridge and a client below, both to best illustrate how this project works and to ensure these instructions remain viable when the ipfs.softwareheritage.org node is no longer up. But for those looking to try this out in the next few weeks, just using the public bridge will be much easier!

Alternative B: Setting up the bridge node

The simplest way to build the bridge node is to use the Nix recipe included in the repository. This will build IPFS and our plugins together, all in a single step.

First, one would clone the repo containing our swh-ipfs-go-plugin.

Then, run nix-build in the cloned repo. The results will appear in ./result/bin/.

In the root directory of the built repo, run ./result/bin/ipfs init -e -p swhbridge to create a bridge node configuration folder. That folder is stored in ~/.ipfs.

Now, rename that folder to ~/.ipfs-bridge by running mv ~/.ipfs ~/.ipfs-bridge.

NB: At this point, running a bridge node requires an auth token for an account with special privileges unavailable to the general public.

Were a user to have an auth token, only one change would be required in the ~/.ipfs-bridge/config file to enable the use of that auth token:

--- a/config
+++ b/config
@@ -11,7 +11,8 @@
         "mounts": [
           {
             "child": {
-              "type": "swhbridge"
+              "type": "swhbridge",
+              "auth-token": "<paste token here>"
             },
             "mountpoint": "/blocks",
             "prefix": "swhbridge.datastore",

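For those who would rather script that change than edit the file by hand, here is a small Python sketch. It assumes the mounts list lives under `Datastore.Spec.mounts` in the config, as in a stock Kubo config; the diff above does not show the enclosing keys, so treat that path and the helper name `add_auth_token` as assumptions.

```python
import json


def add_auth_token(config: dict, token: str) -> int:
    """Set "auth-token" on every swhbridge mount; return how many were updated."""
    updated = 0
    # Assumed location of the mounts list (not shown in the diff).
    mounts = config.get("Datastore", {}).get("Spec", {}).get("mounts", [])
    for mount in mounts:
        child = mount.get("child", {})
        if child.get("type") == "swhbridge":
            child["auth-token"] = token
            updated += 1
    return updated


if __name__ == "__main__":
    # A trimmed, made-up stand-in for ~/.ipfs-bridge/config.
    sample = {
        "Datastore": {
            "Spec": {
                "mounts": [
                    {
                        "child": {"type": "swhbridge"},
                        "mountpoint": "/blocks",
                        "prefix": "swhbridge.datastore",
                    }
                ]
            }
        }
    }
    add_auth_token(sample, "<paste token here>")
    print(json.dumps(sample, indent=2))
```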
To then start the bridge node's daemon, one would run the following in the root directory of that repo:

GOLOG_LOG_LEVEL="swh-bridge=info" \
IPFS_PATH=~/.ipfs-bridge/ \
./result/bin/ipfs daemon

With that daemon in place, a second terminal would be needed to begin configuring & operating an IPFS client node.

The IPFS client node

To initialize an IPFS client node from that built repository, run:

IPFS_PATH=~/.ipfs-client/ \
./result/bin/ipfs init

Afterward, open the newly created ~/.ipfs-client/config file. There, to prevent the bridge node and client node colliding, increment the local ports the client accesses by one apiece (e.g., from 4001 to 4002) under the Addresses heading. That would be Swarm, API, and Gateway. One can ignore the ports listed under Bootstrap.
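The port bump can also be expressed as a short Python sketch. It rewrites the port in each multiaddr under the Addresses section and leaves everything else alone. The helper names are ours, and the sample addresses in the usage note are typical Kubo defaults rather than values taken from this walkthrough.

```python
import re

# Matches the port that follows a /tcp/ or /udp/ component in a multiaddr.
_PORT = re.compile(r"(/(?:tcp|udp)/)(\d+)")


def bump_port(multiaddr: str, offset: int = 1) -> str:
    """Return the multiaddr with each tcp/udp port increased by offset."""
    return _PORT.sub(lambda m: m.group(1) + str(int(m.group(2)) + offset), multiaddr)


def bump_addresses(addresses: dict, offset: int = 1) -> dict:
    """Bump the Swarm, API, and Gateway ports; copy other keys unchanged."""
    result = dict(addresses)
    for key in ("Swarm", "API", "Gateway"):
        value = addresses.get(key)
        if isinstance(value, str):
            result[key] = bump_port(value, offset)
        elif isinstance(value, list):
            result[key] = [bump_port(addr, offset) for addr in value]
    return result
```

For example, `bump_addresses({"API": "/ip4/127.0.0.1/tcp/5001"})` returns `{"API": "/ip4/127.0.0.1/tcp/5002"}`.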

After making these changes, one would start up the client node's daemon by running:

GOLOG_LOG_LEVEL="swhid-debug" \
IPFS_PATH=~/.ipfs-client \
./result/bin/ipfs daemon

Finally, one needs to make sure that it connects to the bridge node.

For the public bridge node, that would be

ipfs swarm connect /dns4/ipfs.softwareheritage.org/tcp/4001/p2p/12D3KooWBThWcjxdCsXg2jRWoBnBkLyr8wghDArHotApjJZAyW3T

For a locally-run bridge node, that would be

ipfs swarm connect /ip4/127.0.0.1/tcp/<port>/p2p/<peer-id>

where <port> is the port you used for the bridge node, and <peer-id> is the identifier for that running instance.

You can find the latter in the Identity.PeerID field of the bridge node's config file (~/.ipfs-bridge/config).
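As a small convenience, the address for a local bridge can be assembled from the parsed config. This Python sketch assumes the config has already been loaded into a dict (for instance with `json.load`); the function name is hypothetical.

```python
def local_bridge_multiaddr(config: dict, port: int) -> str:
    """Build the multiaddr of a bridge node running on this machine."""
    peer_id = config["Identity"]["PeerID"]
    return f"/ip4/127.0.0.1/tcp/{port}/p2p/{peer_id}"
```

The resulting string is what one would pass to ipfs swarm connect from the client node.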

A first query

To make a sample GET request across the bridge, with both daemons running, run the following in another terminal:

IPFS_PATH=~/.ipfs-client/ \
./result/bin/ipfs dag get \
--output-codec=git-raw \
f0178111494a9ed024d3859793618152ea559a168bbcbb5e2

This command prints the raw bytes of the object. Except for the “blob” marker and size at the top, which are part of how git encodes files, this is the original human-readable file from SWH, shown in your terminal via the SWH-IPFS bridge.
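To recover just the file contents from those raw bytes, the git header can be split off. The Python sketch below does that for any raw git object; the function name `split_git_object` is ours.

```python
def split_git_object(raw: bytes) -> tuple[str, bytes]:
    """Split raw git object bytes into (object type, body)."""
    # The header is "<type> <size>" and ends at the first NUL byte.
    header, separator, body = raw.partition(b"\0")
    if not separator:
        raise ValueError("missing NUL byte after the git header")
    kind, _, size = header.partition(b" ")
    if int(size) != len(body):
        raise ValueError("size in header does not match the body length")
    return kind.decode("ascii"), body


if __name__ == "__main__":
    print(split_git_object(b"blob 12\0hello world\n"))  # ('blob', b'hello world\n')
```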

Having successfully received our result, let’s go back to understand the steps involved.

Further queries

If we look at the bridge daemon’s log output we will see:

… INFO swh-bridge bridge/swh_client.go:42 looking up hash: 94a9ed024d3859793618152ea559a168bbcbb5e2

… INFO swh-bridge bridge/swh_client.go:79 found SWHID swh:1:cnt:94a9ed024d3859793618152ea559a168bbcbb5e2

… INFO swh-bridge bridge/swh_client.go:93 fetching SWHID: swh:1:cnt:94a9ed024d3859793618152ea559a168bbcbb5e2

… INFO swh-bridge bridge/swh_client.go:116 SWHID fetched: swh:1:cnt:94a9ed024d3859793618152ea559a168bbcbb5e2

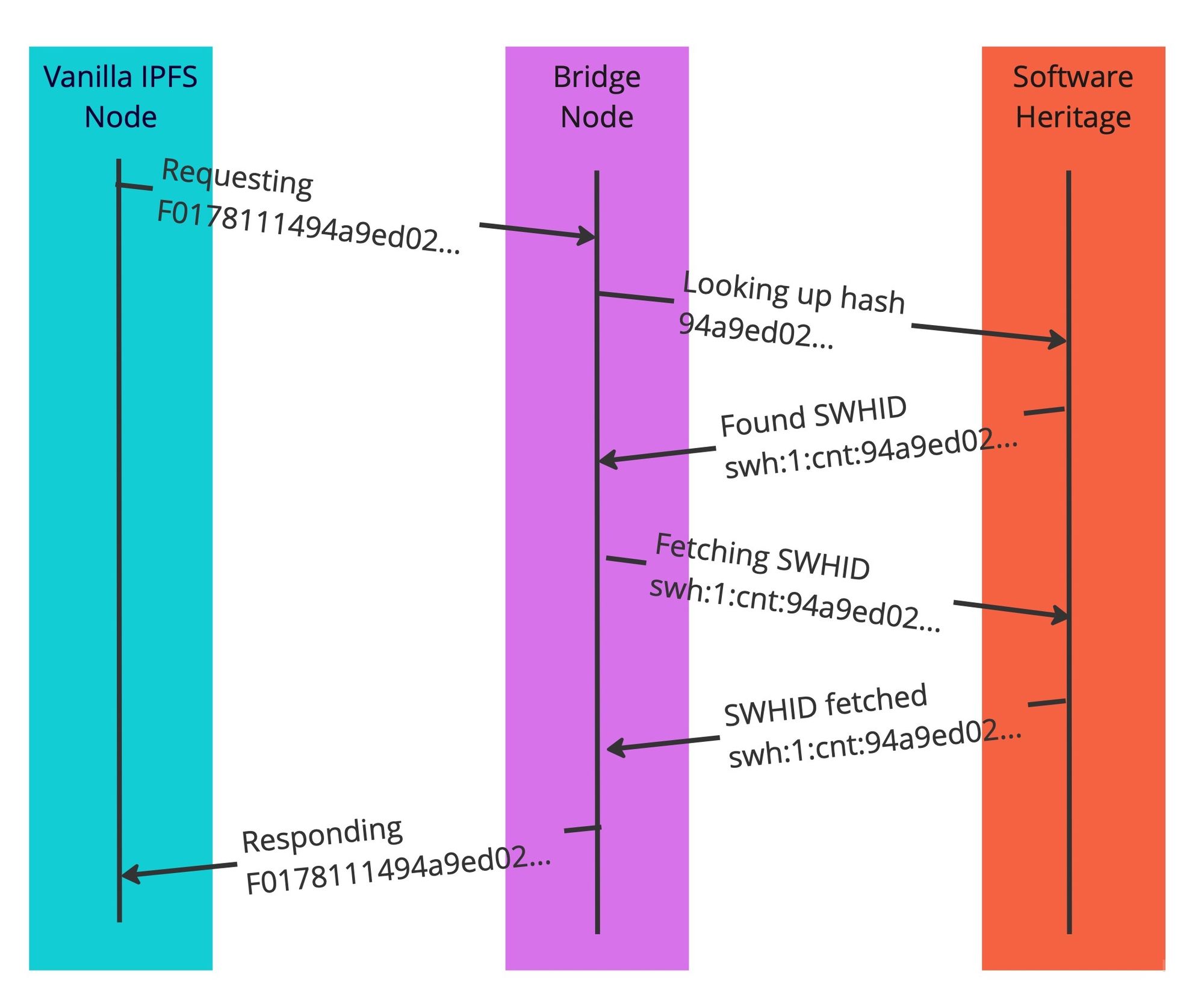

We can diagram what is going on:

If we run the command in the client again, we won’t see any new output in the bridge daemon’s terminal. This is because the client node has cached the result and doesn’t need to fetch it again!

This sort of caching is the foundation of IPFS’s ability to act as a content distribution network.

Likewise, if we delete the client node’s storage with rm ~/.ipfs-client/blocks/* and fetch again, we will see the log output in the bridge daemon once more.
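The behaviour just described can be modelled in a few lines of Python. This is a toy stand-in for a client node's block cache, not Kubo's actual implementation, and the class and attribute names are invented for the example.

```python
class CachingClient:
    """Toy model of a node that caches every block it fetches."""

    def __init__(self, fetch_from_network):
        self._fetch = fetch_from_network  # callable: CID -> bytes
        self._blocks = {}                 # local block store
        self.network_fetches = 0          # how often we had to go remote

    def get(self, cid: str) -> bytes:
        if cid not in self._blocks:
            # Cache miss: this is when the bridge would log a lookup.
            self.network_fetches += 1
            self._blocks[cid] = self._fetch(cid)
        return self._blocks[cid]

    def clear(self) -> None:
        """Forget all cached blocks, like deleting the blocks directory."""
        self._blocks.clear()
```

Fetching the same CID twice increments `network_fetches` only once; after `clear()`, the next fetch goes to the network again.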

Now, one can fetch a directory object by running the following:

IPFS_PATH=~/.ipfs-client/ \
./result/bin/ipfs dag get \
f017811141ecc6062e9b02c2396a63d90dfac4d63690e488b | jq

Instead of displaying the raw bytes, this displays a JSON representation. IPFS understands this JSON format, so we can also query within it:

IPFS_PATH=~/.ipfs-client/ \
./result/bin/ipfs dag get \
f017811141ecc6062e9b02c2396a63d90dfac4d63690e488b/lib | jq

And receive the following output:

{
  "hash": {
    "/": "baf4bcfesbktctry3zey52d7oitx3nkxtxndwsly"
  },
  "mode": "40000"
}
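The link in that output is written in base32 (leading "b") rather than the hex form (leading "f") used on the command line, but it is the same kind of CID. As a sketch, the Python helper below recovers the underlying git hash from either spelling, again assuming CIDv1, the `git-raw` codec, and SHA-1. The function name is ours.

```python
import base64

# CIDv1 (0x01), git-raw codec (0x78), SHA-1 multihash (0x11), 20 bytes (0x14).
GIT_RAW_SHA1_HEADER = bytes([0x01, 0x78, 0x11, 0x14])


def git_hash_from_cid(cid: str) -> str:
    """Return the hex SHA-1 inside a git-raw CID in base16 or base32 form."""
    multibase, payload = cid[:1], cid[1:]
    if multibase == "f":
        raw = bytes.fromhex(payload)
    elif multibase == "b":
        # Multibase base32 is lowercase and unpadded; the standard library
        # decoder wants uppercase with "=" padding.
        padded = payload.upper() + "=" * (-len(payload) % 8)
        raw = base64.b32decode(padded)
    else:
        raise ValueError(f"multibase prefix not handled in this sketch: {multibase!r}")
    if len(raw) != 24 or raw[:4] != GIT_RAW_SHA1_HEADER:
        raise ValueError("not a CIDv1 git-raw SHA-1 identifier")
    return raw[4:].hex()
```

Passing the "hash" link from the output above to `git_hash_from_cid` yields the 40-digit git hash of the lib directory.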

Besides files and directories, there are also commit objects:

IPFS_PATH=~/.ipfs-client/ \
./result/bin/ipfs dag get \
f017811141a0dd0088247f9d4e403a460f0f6120184af3e15 | jq

These have a more interesting JSON format, as commits contain different sorts of things in a more structured way than directories.

Finally, we have SWH snapshot objects, the one type of object in the archive that is not a git object:

IPFS_PATH=~/.ipfs-client/ \
./result/bin/ipfs dag get \
f01F00311149dcebebe2bb56cabdd536787886d582b762a0376 | jq

These objects are parsed by our plugin and not stock Kubo, but they appear in no way second-class.

Recursive queries

Things become most interesting once we follow links deeper into the DAG. Note the /lib/hash appended to the CID we query here:

IPFS_PATH=~/.ipfs-client/ \
./result/bin/ipfs dag get \
f017811141ecc6062e9b02c2396a63d90dfac4d63690e488b/lib/hash | jq

Now we are telling IPFS in one command to get the parent object and then its child. We can go as deep into such a recursive command as we like:

IPFS_PATH=~/.ipfs-client/ \
./result/bin/ipfs dag get \
f017811141ecc6062e9b02c2396a63d90dfac4d63690e488b/lib/hash/zstd/hash/decompress/hash | jq

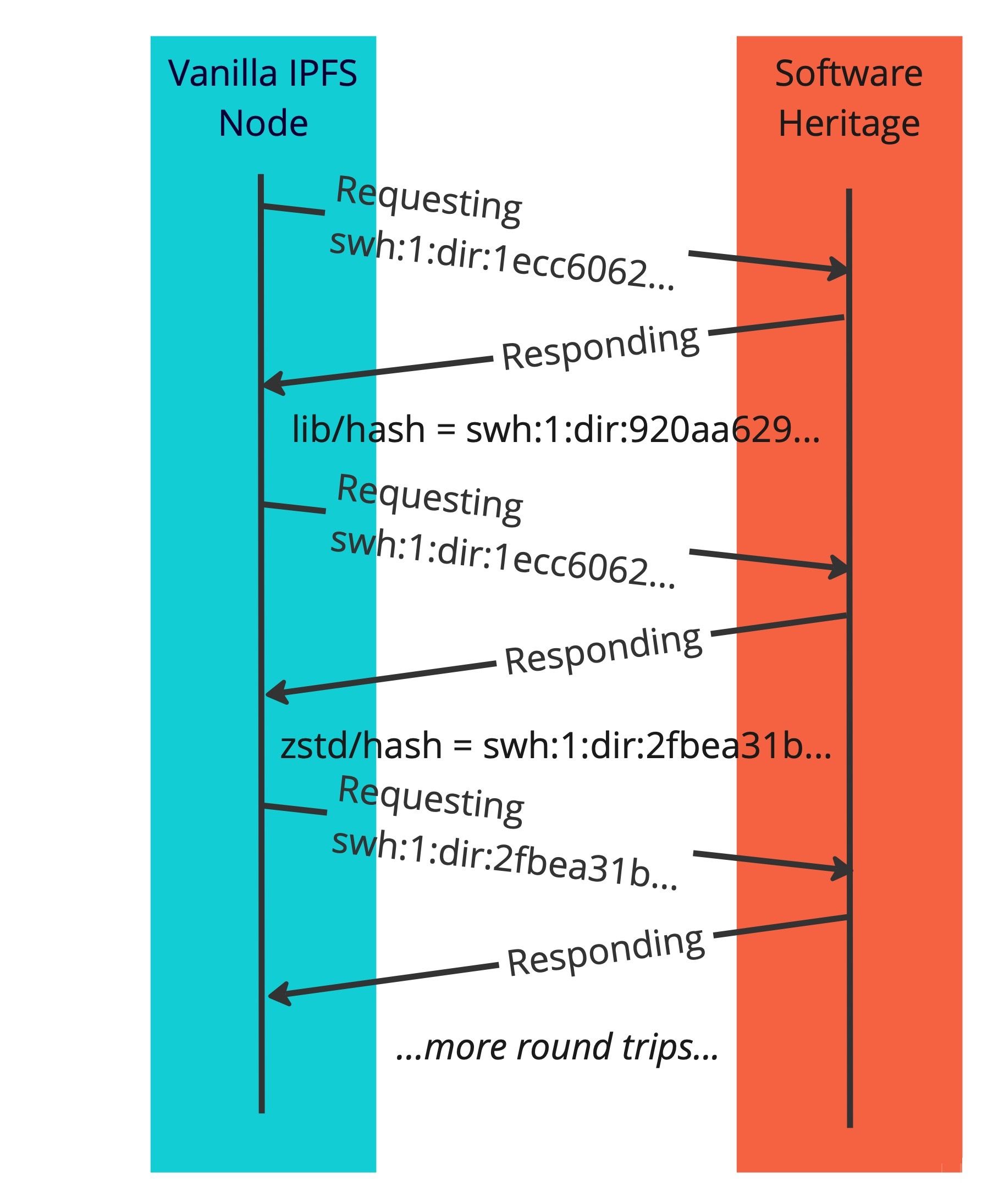

Let’s diagram how this works, eliding the bridge node vs Software Heritage proper details:
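The same traversal can be mimicked over an in-memory toy DAG. The Python sketch below walks a path one segment at a time and follows a link whenever it lands on one, which is roughly what the recursive queries above ask IPFS to do. The node contents and the placeholder "CIDs" are made up, and `resolve` is a hypothetical helper rather than a Kubo API.

```python
# Each key stands in for a CID; each value is the decoded object.
TOY_DAG = {
    "root": {"lib": {"hash": {"/": "lib-dir"}, "mode": "40000"}},
    "lib-dir": {"zstd": {"hash": {"/": "zstd-dir"}, "mode": "40000"}},
    "zstd-dir": {"decompress": {"hash": {"/": "decompress-dir"}, "mode": "40000"}},
    "decompress-dir": {},
}


def resolve(dag: dict, root_cid: str, path: str):
    """Walk path from root_cid, fetching a new object at every link."""
    node = dag[root_cid]
    for segment in filter(None, path.split("/")):
        node = node[segment]
        # In the JSON form, a link is an object with the single key "/".
        if isinstance(node, dict) and set(node) == {"/"}:
            node = dag[node["/"]]  # in a real node, this is a block fetch
    return node


if __name__ == "__main__":
    print(resolve(TOY_DAG, "root", "lib"))       # the directory entry itself
    print(resolve(TOY_DAG, "root", "lib/hash"))  # the object the entry links to
```

Every link followed corresponds to one more object that must be fetched and parsed before the next path segment can be looked up.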

Implementation

Now it's hopefully clear how the bridge node is used, but you might still be curious how it's implemented. If so, this section is for you!

SWH: a new endpoint

Firstly, we had to augment the SWH API with a new endpoint. Despite SWH using git hashing internally, the API originally targeted users who wanted to access structured objects like directories and snapshots in a higher-level, more friendly manner. We, however, just need the raw bytes. We can decode these ourselves, but it is absolutely necessary that what we receive matches the hash exactly, in order for this process to be tamper-proof and trustworthy.

The new endpoint does just that — gives us the raw bytes — and works on all types of objects in the SWH archive alike.
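The check that makes this trustworthy is short. As a sketch in Python, assuming the endpoint's response is the raw git object (header included), verifying it means hashing the bytes and comparing against the hash that was requested. The function name is ours.

```python
import hashlib


def matches_requested_hash(raw_object: bytes, requested_hex: str) -> bool:
    """Return True if the raw object bytes hash to the requested SHA-1."""
    return hashlib.sha1(raw_object).hexdigest() == requested_hex.lower()


if __name__ == "__main__":
    empty_blob = b"blob 0\0"  # raw form of an empty file
    print(matches_requested_hash(empty_blob, "e69de29bb2d1d6434b8b29ae775ad8c2e48c5391"))
```

If even one byte of the response differs, the comparison fails and the object can be rejected.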

IPFS: node plugins

Kubo, the main IPFS implementation, is wonderfully extensible, so to implement our bridge, we didn’t need to craft an entirely new IPFS node, only write some plugins.

The main plugin we need allows us to talk to SWH. IPFS nodes use local storage, called datastores, for caching, but need not care how those datastores work. Not only can nodes be configured to use a variety of built-in datastore types, but datastore plugins can also be written to store things in ways the IPFS developers need not have anticipated. This turns out to be a great way to talk to the Software Heritage archive! The archive may, in fact, be far away on the internet, but it can act like a read-only datastore, just as if it really were some sort of local storage. This plugin is the heart of the bridge: it transforms IPFS’s multihashes into SWHIDs, issues requests to our new endpoint, and then ferries the response back to the rest of the IPFS node.

Normally a node’s datastore is readable and writable, which allows a node to cache the results of requests. With the SWH archive as a read-only datastore, the bridge node cannot write down new things, but it appears as if it has everything in its local storage already! IPFS nodes only respond to requests with data they already have (they don’t re-request based on incoming requests), and this architecture works perfectly for us. The bridge node will respond to a request for any object SWH has, and a request for anything SWH doesn’t have will just be ignored.
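The shape of such a datastore can be sketched in Python. The real plugin is written against Kubo's Go datastore interface, which has more methods than shown here; this is only an analogy, and the class name and the injected `fetch_raw` callable are invented for the example.

```python
class ReadOnlyRemoteStore:
    """Toy datastore that serves reads from a remote archive and rejects writes."""

    def __init__(self, fetch_raw):
        # fetch_raw: callable taking a hex hash and returning bytes, or None
        # when the archive does not have the object.
        self._fetch_raw = fetch_raw

    def has(self, hex_hash: str) -> bool:
        return self._fetch_raw(hex_hash) is not None

    def get(self, hex_hash: str) -> bytes:
        data = self._fetch_raw(hex_hash)
        if data is None:
            raise KeyError(hex_hash)  # unknown objects are simply "not here"
        return data

    def put(self, hex_hash: str, data: bytes) -> None:
        raise PermissionError("the archive is exposed as a read-only datastore")
```

To the rest of the node, `has` and `get` look like local lookups, even though each one may turn into a request to the archive.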

Secondly, while Software Heritage does encode files, directories, commits, and signed tags in the same way as git, it also uses one more type of object that doesn’t correspond to any git object type. These snapshot objects represent the top-level state of a repo (refs and what they point to). In a normal “live” git repo, this information is not content-addressed, but is rather mutable local state. For SWH’s archival purposes, however, not content-addressing the information wouldn’t do, and thus a new type of object was needed. We used an IPFS-supported IPLD plugin to parse SWH snapshot objects.

Taking a step back, if the goal is to fetch objects from IPFS, you might be wondering why we started talking about parsing them. Can’t we fetch an object by content address without caring about what its bytes mean, treating it as a black box? Yes, we can. And so if fetching single objects was all we wanted to do, there would be no reason to parse snapshots or vanilla git objects. But IPFS can do more than fetch single objects. It also supports some very cool features like recursive querying and pinning. These rely on exposing the recursive Merkle Directed Acyclic Graph (DAG) structure: by parsing our content-addressed objects, we recover the content addresses of child objects, which we can likewise fetch and parse. This opens up many possibilities that we look forward to explaining in future blog posts.

Future work

Performance and pipelining

At the time of writing, an entire source project is not downloaded in a single pass, but rather piece by piece. We have to receive a parent object and decode it to figure out what its children are, and then fetch them individually. The problem stems from a lack of pipelining: every one of those fetches requires a round trip between a client node and the SWH API, and the accumulated latency is high.

To solve this, we need to be able to request more information at once. There is no getting around the fact that the client cannot know what the children are until it has the parent. However, it can still tell the node it is communicating with that it wants the children too. That node can then start sending information the client didn’t explicitly ask for. IPFS and SWH are already building out support for this independently.
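A back-of-the-envelope model shows why this matters. The Python sketch below counts requests for a toy source tree under two simplified strategies: one request per object, and one request per level of the tree (all known children asked for together). Both strategies, the function name, and the tree are illustrative assumptions, not measurements of the bridge.

```python
def count_round_trips(children: dict, root: str) -> tuple[int, int]:
    """Return (requests if one per object, requests if one per tree level)."""
    # Assumes a tree: an object shared by several parents is counted each time.
    per_object = 0
    per_level = 0
    level = [root]
    while level:
        per_level += 1            # one batched request for this whole level
        per_object += len(level)  # or one request for every object in it
        level = [child for node in level for child in children.get(node, [])]
    return per_object, per_level


if __name__ == "__main__":
    tree = {"root": ["a", "b"], "a": ["c", "d"]}
    print(count_round_trips(tree, "root"))  # (5, 3)
```

With a server that pushes descendants without being asked for each one, the number of round trips could in principle drop further, toward a single request for the whole tree.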

Large objects

IPFS requires chunking, as it has a small maximum atomic object size. Git, however, doesn’t chunk, and objects can be arbitrarily big. What to do? One solution is to use cryptographic tricks to break up git objects into smaller objects without sacrificing content addressing.

A better long-term solution is to influence Git’s future direction, as the existence of projects like git-lfs demonstrates that even without IPFS, Git’s large object support is subpar. That said, having a stop-gap solution in the meantime is better than none, and ideally could help focus attention on this issue.

In conclusion

Beyond the practical benefits of having this bridge, we hope this project helps promote the practice of content addressing data end-to-end. By prioritizing shared data models over difficult, tedious, or lossy intermediary conversions between data structures, we can avoid a whole class of issues that plague computer infrastructure today. For example, rather than learning to live with tarball compression issues, we could create the means to avoid them.

We are grateful to the NLnet Foundation for the grant that funded this project. Bringing together these software ecosystems was made possible by the close collaboration of ourselves, Software Heritage, and Protocol Labs. We look forward to what the future holds for this collaboration.
